Django is a battle-tested framework that simplifies web development, but deploying a Django application goes far beyond just writing clean code or spinning up a server.

A truly production-ready deployment requires careful planning—handling security, scalability, database performance, and server reliability to ensure your app runs smoothly under real-world conditions.

In this article, we'll explore several best practices to make your life easier when deploying Django applications with Docker Compose.

1. Choose the right Django Docker boilerplate

When containerizing a Django application, starting with a well-structured boilerplate can save time, reduce configuration errors, and improve maintainability. While you can build a custom setup from scratch, using a proven template ensures best practices are followed.

Cookiecutter-Django is one of the most widely used boilerplates for Django applications. It includes:

- Docker and Docker Compose support out of the box.

- Security best practices, including strong default settings and HTTPS enforcement.

- Built-in support for PostgreSQL, Redis, Celery, and Traefik.

- Preconfigured environment variables using .env files for secure configuration management.

- Comprehensive documentation and an active community.

If you are looking for a middle ground between a full-fledged boilerplate and a DIY setup, Nick JJ’s Docker Django Example is a great resource.

It provides a leaner alternative that maintains essential functionality without unnecessary complexity. This approach provides a clean, production-optimized setup with integrated PostgreSQL, Gunicorn, and Nginx, while allowing greater flexibility in your configuration decisions.

While using a prebuilt boilerplate is convenient, you might prefer to start with a minimal setup and add features incrementally. A good strategy is to study a well-structured repository (such as Cookiecutter-Django) and extract only the needed parts. This helps you maintain clarity and avoid unnecessary complexity.

2. Leverage environment variables for configuration

Hardcoding configuration values directly into Django's settings.py can make deployments inflexible and pose security risks. Instead, using environment variables ensures that your application adapts to different environments while keeping sensitive data out of version control.

Docker Compose simplifies environment variable management using .env files and the env_file directive, making it easy to maintain consistent configurations across development, staging, and production setups.

Rather than defining credentials and settings in the source code, Django should retrieve them dynamically from the environment.

This approach provides a few key benefits:

- Configuration changes do not require modifying code, allowing the same codebase to be used across multiple environments.

- Default values ensure that missing variables do not break the application.

- Secrets such as API keys and database credentials remain outside the repository, reducing the risk of leaks.
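The points above can be sketched with the standard library alone. This is a minimal illustration rather than a complete settings module: the helper names (`env_bool`, `env_list`) and the variable names (`DJANGO_SECRET_KEY`, `DJANGO_DEBUG`, `DJANGO_ALLOWED_HOSTS`) are assumptions chosen for the example.

```python
# settings.py (excerpt): read configuration from the environment.
import os
from typing import List, Mapping


def env_bool(name: str, default: bool = False, env: Mapping[str, str] = os.environ) -> bool:
    """Interpret common truthy strings ("1", "true", "yes", "on") as True."""
    raw = env.get(name)
    if raw is None:
        return default
    return raw.strip().lower() in {"1", "true", "yes", "on"}


def env_list(name: str, default: str = "", env: Mapping[str, str] = os.environ) -> List[str]:
    """Split a comma-separated value into a list, dropping empty items."""
    return [item.strip() for item in env.get(name, default).split(",") if item.strip()]


# Hypothetical variable names; the fallback key is for local development only.
SECRET_KEY = os.environ.get("DJANGO_SECRET_KEY", "insecure-dev-only-key")
DEBUG = env_bool("DJANGO_DEBUG", default=False)
ALLOWED_HOSTS = env_list("DJANGO_ALLOWED_HOSTS", default="localhost,127.0.0.1")
```

In production, a stricter variant would probably fail fast when the secret key is missing instead of falling back to a default.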

Instead of manually passing environment variables, store them in a .env file, then reference that file in docker-compose.yml through the env_file directive.

This setup loads the environment variables automatically when running containers, ensuring that each environment remains properly configured without code changes.

Providing a .env.example file in your repository helps other developers quickly understand the required environment variables. This file should include placeholders or safe defaults but never contain actual secrets.

To prevent accidental leaks, exclude .env files from version control by listing them in .gitignore.

For production deployments, storing secrets in a dedicated secrets manager such as AWS Secrets Manager, HashiCorp Vault, or Docker secrets adds an extra layer of security.

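Using a package like django-environ further improves environment variable management by allowing structured parsing of values, such as casting strings to booleans and lists or expanding a database URL into Django's DATABASES format.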

This approach ensures that environment variables are handled consistently and securely across all stages of deployment.

3. Implement a robust health check system

A reliable health check mechanism ensures that your Django application remains operational and can recover automatically in case of failures.

In containerized environments, health checks allow orchestration tools to monitor application health and take corrective actions like restarting a failing container.

Docker provides built-in health checks that periodically verify if a service is running as expected. Django applications can expose a dedicated health check endpoint to confirm critical dependencies like the database and cache are accessible.

Docker's healthcheck directive in docker-compose.yml allows containers to self-report their status. A simple health check can be implemented by regularly querying a Django endpoint.

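A typical configuration checks the application's health every 30 seconds (interval), fails a probe if no response is received within 5 seconds (timeout), and marks the container as unhealthy after 3 consecutive failures (retries). Note that plain Docker Compose only records the unhealthy status. Restarting the container or raising an alert requires an orchestrator such as Docker Swarm or Kubernetes, or a monitoring tool that acts on that status.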
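Slim base images often ship without curl, so the probe command itself is sometimes written in Python. The sketch below is one way to do that with the standard library; the file name `healthcheck.py`, the default URL, and the `/health/` path are assumptions for illustration.

```python
# healthcheck.py: hypothetical probe script for a container health check.
import http.client
import sys
import urllib.error
import urllib.request
from typing import List, Optional

DEFAULT_URL = "http://localhost:8000/health/"  # assumed port and path


def is_healthy(url: str, timeout: float = 5.0) -> bool:
    """Return True only if the endpoint answers with HTTP 200 in time."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except (urllib.error.URLError, http.client.HTTPException, OSError, ValueError):
        # Covers refused connections, timeouts, 4xx/5xx responses
        # (raised as HTTPError), and malformed URLs.
        return False


def main(argv: Optional[List[str]] = None) -> int:
    """Map the probe result to an exit code: 0 = healthy, 1 = unhealthy."""
    args = sys.argv[1:] if argv is None else argv
    url = args[0] if args else DEFAULT_URL
    return 0 if is_healthy(url) else 1
```

Ending the file with `raise SystemExit(main())` would let the health check's test command invoke it as `python healthcheck.py`, since Docker treats exit code 0 as healthy and 1 as unhealthy.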

A dedicated Django view can perform essential system checks, such as database connectivity and cache availability.
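One possible shape for such a view is sketched below. The function names and the response keys are assumptions, and the Django imports are deferred into the functions so that the aggregation logic in `run_checks` can be unit-tested without a configured project.

```python
# health.py: hypothetical module holding the health check view.
from typing import Callable, Dict, Tuple


def run_checks(checks: Dict[str, Callable[[], None]]) -> Tuple[int, Dict[str, str]]:
    """Run each check; any exception marks that dependency as failed."""
    results: Dict[str, str] = {}
    for name, check in checks.items():
        try:
            check()
            results[name] = "ok"
        except Exception:
            # Report only "error" so internal details are not exposed.
            results[name] = "error"
    status = 200 if all(value == "ok" for value in results.values()) else 503
    return status, results


def check_database() -> None:
    """Issue a trivial query on the default database connection."""
    from django.db import connection

    with connection.cursor() as cursor:
        cursor.execute("SELECT 1")
        cursor.fetchone()


def check_cache() -> None:
    """Write a short-lived key and read it back from the default cache."""
    from django.core.cache import cache

    cache.set("health_check", "ok", timeout=5)
    if cache.get("health_check") != "ok":
        raise RuntimeError("cache round-trip failed")


def health_check(request):
    """Django view: 200 when every dependency responds, 503 otherwise."""
    from django.http import JsonResponse

    status, results = run_checks({"database": check_database, "cache": check_cache})
    return JsonResponse(results, status=status)
```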

This endpoint returns an HTTP 200 response if the database and cache are accessible or a 503 error if any dependency fails.

You can then add the new health check view to urls.py so Docker and monitoring tools can access it.

Health checks matter because they verify that critical dependencies are available. For production environments, consider restricting access to the health check endpoint or providing a summarized version to avoid exposing sensitive system details.

4. Optimize static and media file handling

Handling static and media files efficiently is essential in containerized Django deployments. Unlike traditional setups, where files can be stored persistently on disk, Docker containers are ephemeral: files written to the container's filesystem at runtime are lost when the container is removed and recreated, which typically happens on each deployment.

To ensure that static and media files are properly stored and served in a Dockerized Django application, it's important to configure persistent storage and optimize file serving for performance.

Docker volumes allow media uploads to persist across container restarts while keeping static files accessible for efficient serving.

A typical docker-compose.yml configuration includes dedicated volumes for both.

This setup collects static files in the application directory, which can be built into the image. Media files (uploads) are stored in a Docker volume (media_data) to persist across container restarts. Nginx serves media files directly, reducing the load on Django.

Django's settings.py should match the Docker volume structure to ensure that static and media files are correctly stored and served:
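As a rough sketch, the relevant settings might look like the following. The variable names (`DJANGO_STATIC_ROOT`, `DJANGO_MEDIA_ROOT`) and the `/app/...` default paths are assumptions; they would need to match the mount points declared in your own docker-compose.yml.

```python
# settings.py (excerpt): storage paths driven by environment variables.
import os

# URL prefixes under which the files are served (by Nginx in production).
STATIC_URL = "/static/"
MEDIA_URL = "/media/"

# Filesystem locations inside the container. The defaults are assumed
# paths and should mirror the volume mount points in docker-compose.yml.
STATIC_ROOT = os.environ.get("DJANGO_STATIC_ROOT", "/app/staticfiles")
MEDIA_ROOT = os.environ.get("DJANGO_MEDIA_ROOT", "/app/media")
```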

This configuration allows Django to use environment variables to determine storage paths, making it adaptable to different environments.

To ensure that static files are always up to date, run collectstatic automatically when the container starts in production, for example by calling python manage.py collectstatic --noinput from the entrypoint script.

This ensures that static files are collected and stored in the correct location before the application starts serving requests.

This approach works because it ensures media files persist across restarts using Docker volumes, improves performance by letting Nginx handle static and media file serving, and keeps the setup flexible for local development and production environments.

For larger applications, consider using a CDN for static files and object storage such as AWS S3 for media files to enhance scalability and reduce server load.

5. Set up a robust database migration strategy

Database migrations are essential to Django development, allowing you to update schemas without losing data. In Dockerized environments, handling migrations correctly ensures smooth deployments while avoiding race conditions and application failures.

A structured migration strategy prevents issues where the application starts before the database is fully ready or multiple containers attempt to run migrations simultaneously.

Docker Compose lets you declare service dependencies so that the database is healthy before Django starts. Using the depends_on directive with the service_healthy condition, backed by a health check on the database service, ensures the application does not start prematurely.

This setup ensures that the database is healthy before Django attempts to connect, the application waits for PostgreSQL instead of failing immediately, and migrations do not run against an unavailable database.

Instead of running migrations inside the web container, you can apply them from a separate service that runs before the application starts.

This ensures that migrations run separately and complete before the web container starts, the web service does not need database write access for schema changes, and there is better control over execution order during deployments.

Running unnecessary migrations increases downtime. A simple script can check if new migrations are required before applying them.
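One way to write such a script is to inspect the output of `manage.py showmigrations --plan`, which prefixes applied migrations with `[X]` and unapplied ones with `[ ]`. The file and function names below are hypothetical, and the sketch assumes it runs from the directory that contains manage.py.

```python
# check_migrations.py: hypothetical helper to detect unapplied migrations.
import subprocess
import sys
from typing import List


def pending_migrations(plan_output: str) -> List[str]:
    """Return the migrations that the plan output marks as unapplied."""
    pending = []
    for line in plan_output.splitlines():
        line = line.strip()
        if line.startswith("[ ]"):
            pending.append(line[3:].strip())
    return pending


def read_migration_plan() -> str:
    """Run `manage.py showmigrations --plan` and return its stdout."""
    result = subprocess.run(
        [sys.executable, "manage.py", "showmigrations", "--plan"],
        check=True,
        capture_output=True,
        text=True,
    )
    return result.stdout


def migrate_if_needed() -> bool:
    """Apply migrations only when some are pending; return True if run."""
    if not pending_migrations(read_migration_plan()):
        return False
    subprocess.run([sys.executable, "manage.py", "migrate", "--noinput"], check=True)
    return True
```

Recent Django versions also provide `migrate --check`, which exits with a non-zero status when unapplied migrations exist and may be enough on its own for a shell-based entrypoint.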

This ensures migrations only run when necessary, reducing unnecessary operations.

A structured migration approach matters because it ensures database readiness before migrations are applied, prevents multiple containers from running migrations simultaneously, reduces unnecessary downtime by checking for pending migrations, and keeps the application startup process smooth and predictable.

For zero-downtime deployments, consider blue-green deployment strategies where migrations run on a separate instance before switching traffic to the updated application.

6. Configure asynchronous task processing with Celery

Django applications often require background processing for long-running tasks such as sending emails, processing file uploads, or handling scheduled jobs. Running these tasks synchronously within Django can block requests and slow down response times.

Using Celery with Docker allows background tasks to be executed asynchronously, improving application performance and scalability.

Celery requires a message broker (such as Redis) to queue tasks and workers to process them asynchronously. Define these services in docker-compose.yml.

This setup ensures that Celery workers start only after Redis is available, that Redis queues tasks so Django can offload processing, and that workers can be scaled independently by adding more instances when needed.

Celery settings should be configured via environment variables to maintain flexibility across different environments.

Django's settings.py should also define the broker and result backend:
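A minimal sketch of those settings follows. It assumes the Celery app loads its configuration with `app.config_from_object("django.conf:settings", namespace="CELERY")`, so every setting carries the `CELERY_` prefix, and that the broker's Compose service is named `redis`; both are assumptions rather than details given above.

```python
# settings.py (excerpt): Celery broker and result backend from the environment.
import os

# "redis" is the assumed Compose service name; 6379 is Redis's default port.
CELERY_BROKER_URL = os.environ.get("CELERY_BROKER_URL", "redis://redis:6379/0")
CELERY_RESULT_BACKEND = os.environ.get("CELERY_RESULT_BACKEND", "redis://redis:6379/1")

# Optional hard limit per task, in seconds, also overridable per environment.
CELERY_TASK_TIME_LIMIT = int(os.environ.get("CELERY_TASK_TIME_LIMIT", "300"))
```

Using separate Redis database numbers for the broker and the result backend is a design choice made here to keep queued messages and stored results apart; a single database also works.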

For scheduled tasks, Celery Beat provides periodic execution similar to cron jobs. Define a new service for Celery Beat in docker-compose.yml that runs the celery beat command instead of a worker.

This allows tasks to be scheduled automatically without manual intervention.

Celery improves performance because background tasks run asynchronously, preventing request blocking; workers can be scaled independently to handle load spikes; task queues ensure reliability, even during high traffic; and graceful shutdown allows ongoing tasks to complete before stopping.

For production use, consider monitoring Celery workers with Flower and centralizing logs for better task tracking and debugging.

7. Implement a scalable logging strategy

Logging is essential for monitoring, debugging, and gaining insights into Django applications running in production. In a Dockerized environment, log management must be structured so that logs remain accessible, easily aggregated, and properly retained.

By configuring Django and Docker to handle logs effectively, you can streamline troubleshooting and integrate with centralized log management tools.

Django's built-in logging framework can be configured to output logs in different formats, allowing structured JSON logs in production and more readable logs in development.
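A configuration along these lines could look like the sketch below. The `JsonFormatter` class is a minimal stand-in for a dedicated JSON logging library, and the variable names `DJANGO_LOG_LEVEL` and `DJANGO_LOG_FORMAT` are assumptions made for the example.

```python
# settings.py (excerpt): console logging, JSON by default, plain text on request.
import json
import logging
import logging.config
import os


class JsonFormatter(logging.Formatter):
    """Minimal JSON formatter; a stand-in for a dedicated library."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "time": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        if record.exc_info:
            payload["exception"] = self.formatException(record.exc_info)
        return json.dumps(payload)


# Hypothetical variable names.
LOG_LEVEL = os.environ.get("DJANGO_LOG_LEVEL", "INFO").upper()
LOG_FORMAT = os.environ.get("DJANGO_LOG_FORMAT", "json")  # "json" or "plain"

LOGGING = {
    "version": 1,
    "disable_existing_loggers": False,
    "formatters": {
        "json": {"()": JsonFormatter},
        "plain": {"format": "%(asctime)s %(levelname)s %(name)s: %(message)s"},
    },
    "handlers": {
        "console": {
            "class": "logging.StreamHandler",
            "stream": "ext://sys.stdout",  # stdout, so Docker captures the output
            "formatter": "json" if LOG_FORMAT == "json" else "plain",
        },
    },
    "root": {"handlers": ["console"], "level": LOG_LEVEL},
}
```

Django passes the `LOGGING` dictionary to `logging.config.dictConfig`, so setting `DJANGO_LOG_FORMAT=plain` in a development .env file would switch to the readable format without touching the code.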

This setup ensures that logs are written to standard output (following Docker's best practices), production logs are formatted in JSON (making them easier to process with log management tools), and log levels are configurable via environment variables (allowing dynamic changes without modifying the code).

By default, Docker captures each container's standard output and standard error and stores them in a local JSON file. To prevent logs from consuming too much disk space, enable log rotation in docker-compose.yml through the logging driver's max-size and max-file options.

Setting max-size to 10m and max-file to 3 restricts log size to 10MB per file and retains the last 3 log files, preventing disk overflow.

For local development, you can override the log settings in docker-compose.override.yml, which keeps logs lightweight and manageable during development.

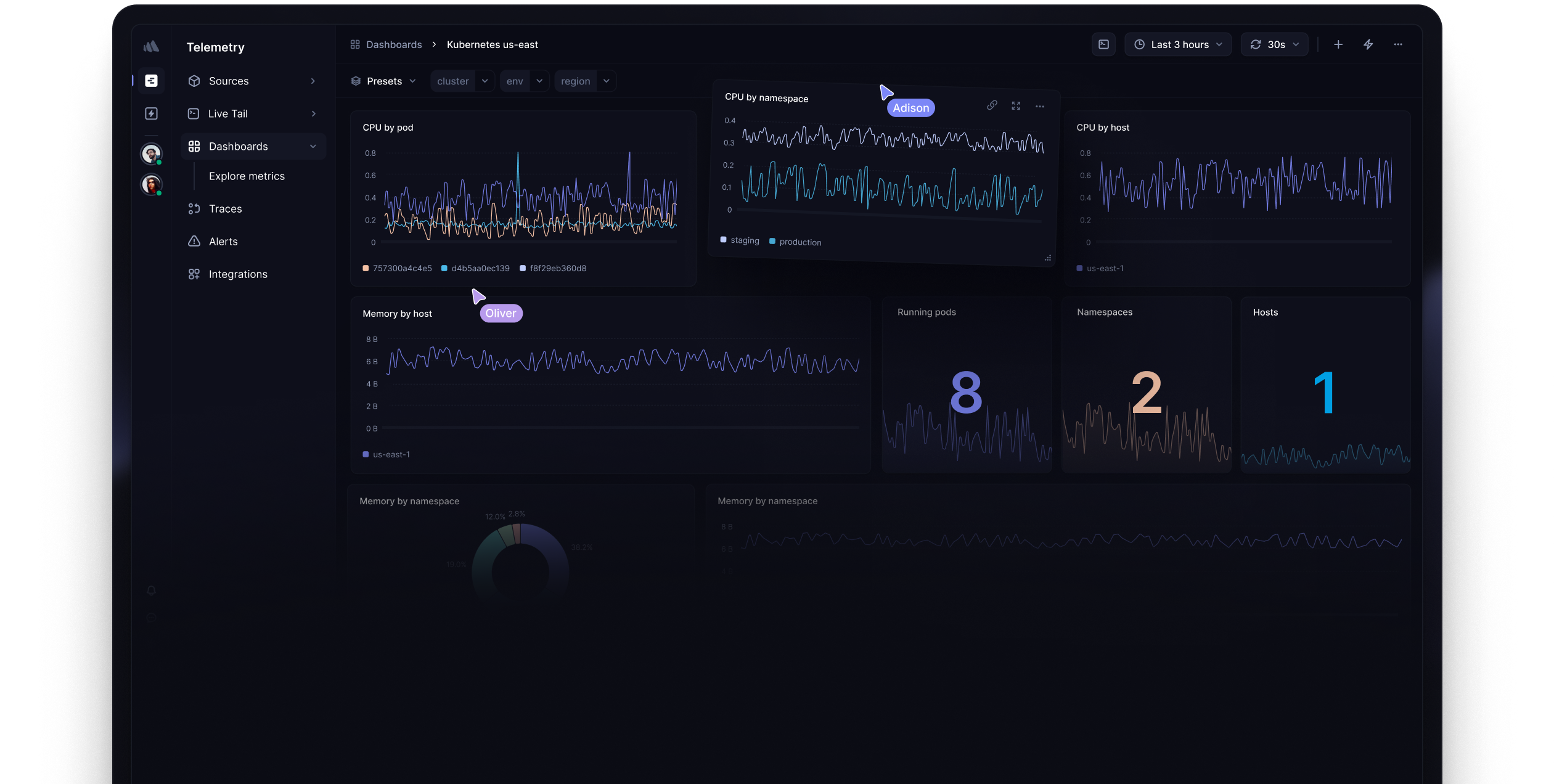

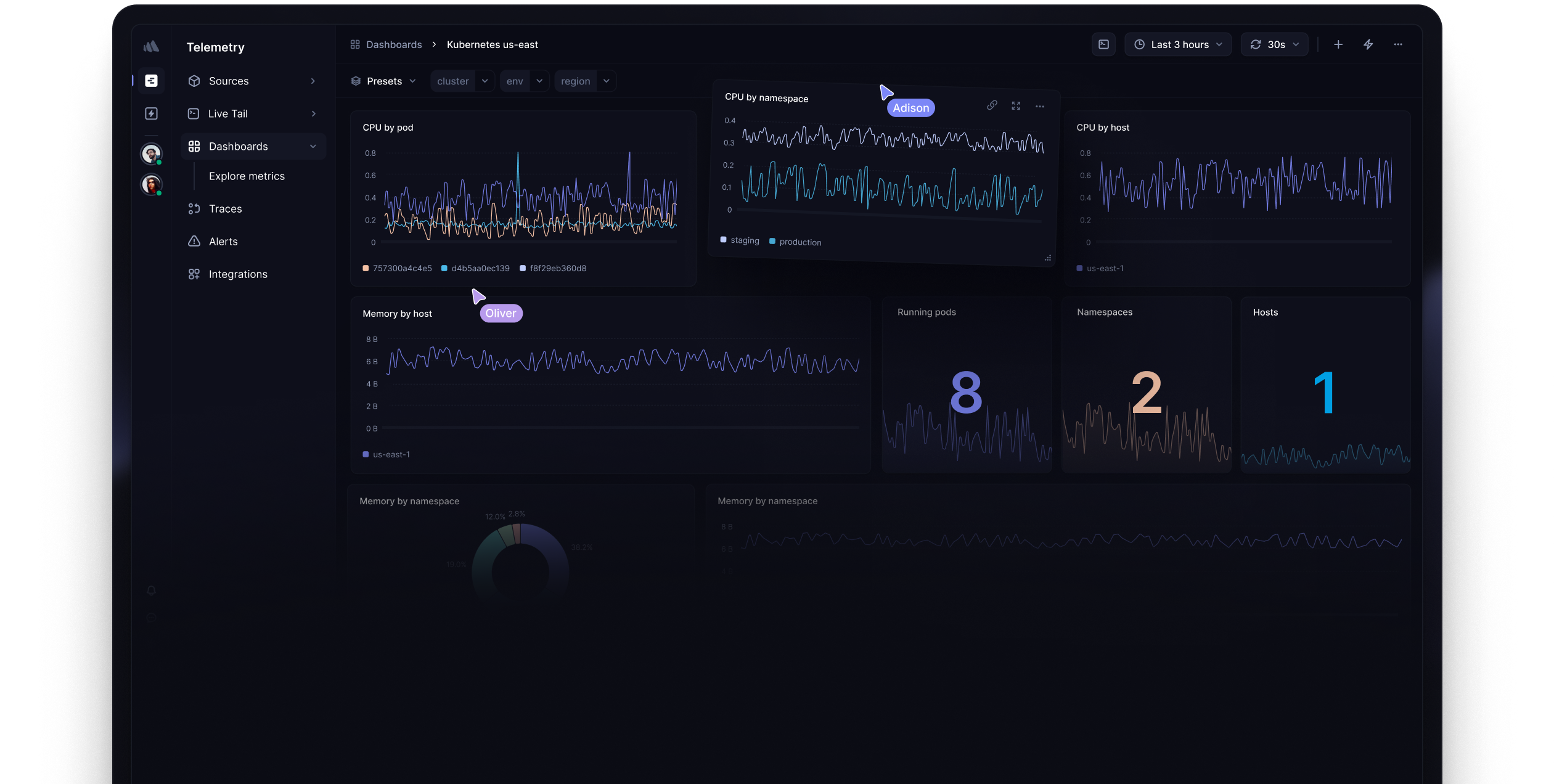

For production deployments, storing logs locally is not scalable. Instead, integrate with a centralized logging system such as Better Stack.

A centralized logging solution makes it easier to monitor trends and errors across multiple services, set up alerts for critical issues, and analyze application performance in real time.

A well-structured logging system ensures that debugging and monitoring remain efficient as your Django application scales.

Final thoughts

Deploying a Django application in a containerized environment involves more than writing clean code. A production-ready deployment requires careful attention to security, scalability, database reliability, and efficient resource management to ensure long-term stability.

Applying the strategies and best practices covered in this guide equips a Django project to handle real-world traffic, scale efficiently, and remain resilient in production environments.